Video Basics (Part 2): Colour Depth and Sub-Sampling

In Part One, Sydney Retail Manager Neale Head navigated the complex terrain of frame rates and resolution for photographers hoping to make the leap into videography. Undeterred, he continues on to explain colour depth and sub-sampling.

Defining Definition Part 2: Colour Depth & Sub-Sampling

As photographers we are all accustomed to pressing the shutter release and having our camera deliver a full uncompressed RAW image on every shot. Even at 12 or 14 fps burst speeds, professional DSLRs are capable of producing the large high-resolution files we require. But as we all know – unless your camera is using CFexpress cards (e.g. 1DX Mark III, R3, R5 and R5 C) – there are only so many shots your camera can take before its buffer fills up and your processor needs a lie down. This is the next challenge to understanding high quality digital video.

Image by Jordan Allison

A videographer needs to be recording at least 25 images every second for as long as they need to hold the shot. So how does the camera get around these limitations when dealing with so many images being recorded in such a short period of time?

Basically, the single frames of video footage are usually nowhere near as large as your stills images – they are compressed to a much smaller file size to allow your processor to capture them and to write them to your cards at the speed required.

However, the question remains: how can these files be compressed and still maintain a "professional" image quality (IQ)? Firstly, the camera's processor uses a compression algorithm called a Codec (short for COmpression/DECompression) – but more on that later. Compression of this kind by its nature results in an unavoidable loss of information and therefore IQ. The processor therefore needs to know what information can be discarded while still maintaining acceptable IQ.

As we know, a digital image is made up of two components – light (luminance) and colour (chrominance). Of these two elements, light is the one we best leave as is. That's our exposure! That's the thing we work so hard to get right. We can't have some minicomputer messing with our expertise. So that leaves colour and that's where we have a lot more latitude to play with.
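To make the light/colour split concrete, here is a minimal sketch of how an RGB pixel can be separated into one luma value and two chroma values. The weights are those of the BT.709 HD standard, which is an assumption on my part (this article does not name a standard), and the function name `rgb_to_ycbcr` is purely illustrative.

```python
# BT.709 luma weights (an assumption; the article does not name a standard).
KR, KG, KB = 0.2126, 0.7152, 0.0722


def rgb_to_ycbcr(r: float, g: float, b: float) -> tuple[float, float, float]:
    """Split a gamma-encoded RGB pixel (each value 0.0 to 1.0) into luma and chroma."""
    y = KR * r + KG * g + KB * b       # luma: the weighted brightness of the pixel
    cb = (b - y) / (2 * (1 - KB))      # blue-difference chroma, range -0.5 to 0.5
    cr = (r - y) / (2 * (1 - KR))      # red-difference chroma, range -0.5 to 0.5
    return y, cb, cr


# A neutral grey carries brightness but (almost exactly) zero colour information.
y, cb, cr = rgb_to_ycbcr(0.5, 0.5, 0.5)
print(f"luma={y:.3f} cb={cb:.3f} cr={cr:.3f}")
```

Once the image is stored this way, the two chroma values can be thinned out without touching the luma value that carries your exposure.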

Bit Depth

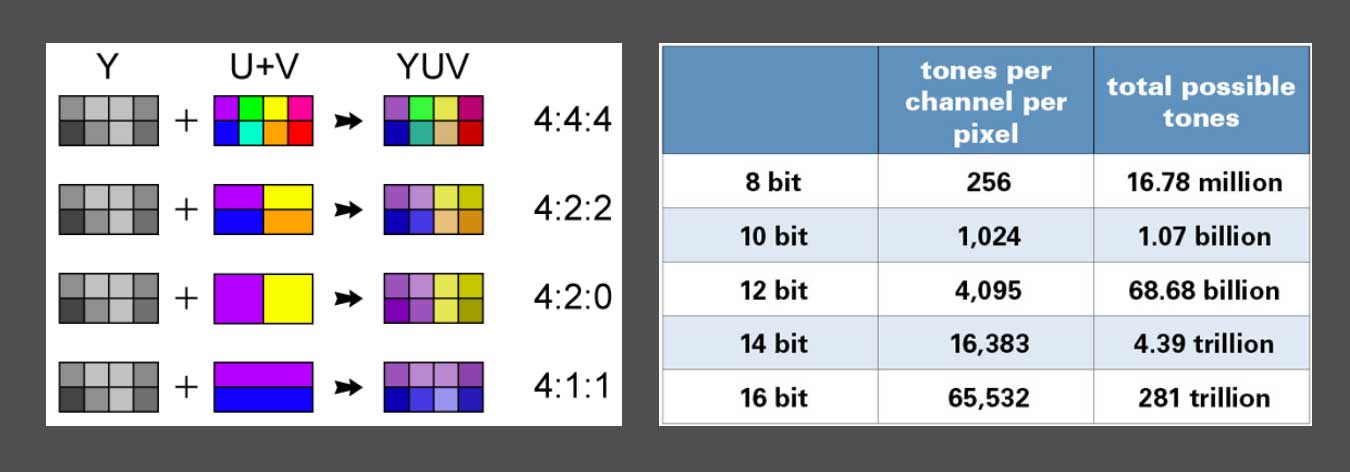

Bit depth refers to how many tones (or colours) are produced for each RED, GREEN and BLUE (RGB) pixel channel in terms of 0s and 1s (or "bits"). A RAW still image from, say, a Canon EOS R5 delivers a 14-bit file, which means the camera produces 16,384 tones from each channel for a total of about 4.4 trillion colours. That's a lot of colour! It's also a LOT of data! Too much for most, if not all, digital video cameras to handle. Up until quite recently the standard bit depth for even high-end video cameras was 8-bit (i.e. 256 tones per channel for a total of 16.78 million colours) and that's ... well actually that's still a lot of colours, isn't it? More than you'd need for almost any purpose, and it's a lot less data, which makes it much easier for the processor to churn out 25 or 50 images per second.

Image by Jordan Allison

These days 10-bit is generally accepted as the standard for a professional video file – and in fact if you're making content for broadcast, your client will most likely insist on a 10-bit delivery. 10-bit delivers just over one billion colours and is much better for post-production processes such as colour grading.

Twelve-bit is usually found in cameras that can record in a RAW video format, or at resolutions under 4K (we're getting closer to that whole "pay-off" thing I teased in the last article).
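If you'd like to check the arithmetic behind these bit depths yourself, here is a small sketch. It assumes three channels (R, G, B) at the same bit depth; the function names are just illustrative.

```python
def tones_per_channel(bit_depth: int) -> int:
    """Number of distinct tones a single channel can hold at this bit depth."""
    return 2 ** bit_depth


def total_colours(bit_depth: int) -> int:
    """Total colours available from three channels (R, G, B) at this bit depth."""
    return tones_per_channel(bit_depth) ** 3


for bits in (8, 10, 12, 14):
    print(f"{bits}-bit: {tones_per_channel(bits):,} tones per channel, "
          f"{total_colours(bits):,} colours")

# 8-bit: 256 tones per channel, 16,777,216 colours
# 10-bit: 1,024 tones per channel, 1,073,741,824 colours
# 12-bit: 4,096 tones per channel, 68,719,476,736 colours
# 14-bit: 16,384 tones per channel, 4,398,046,511,104 colours
```

Every extra bit doubles the tones per channel, which is why the jump from 8-bit to 10-bit is so much bigger than it sounds.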

Chroma Sub-Sampling

When approaching Chroma Sub-Sampling it's important to understand three crucial things:

- It's a bit boring

- You don't need to know how it actually works; and

- Choosing the right setting is very important.

Now that we're across that let's power through, shall we?

Sub-sampling refers to the process by which the processor decides exactly what colour (and light) information it needs from the sensor and then just chucks the rest away. Where bit depth tells you how much colour a pixel can produce, sub-sampling is about how much of it the camera will actually use.

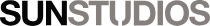

The sub-sampling value for a video file (or a video signal) is represented as a set of three numbers, each ranging from 0 to 4. The three most common values you'll see are 4:4:4, 4:2:2 and 4:2:0. The notation is a numerical shorthand that imagines a block of 8 pixels, 2 high and 4 across.

The first number indicates the number of pixels wide the sample is (4) and how many will have light – or luma – sampled (again 4 because as previously stated we don't want to lose any light information). The second number tells you how many of the pixels in the top row will have colour information taken or chroma sampled. And the third number tells you how many pixels in the bottom row will have chroma sampled.

- A 4:4:4 file has no sub-sampling, as all luma information is taken from the width of the block, and all the chroma information is taken from both the top row and the bottom row. This would represent the largest file as it has the most information.

- A 4:2:2 file features some sub-sampling, as it has the required 4 value for luma, but in this case only half (2) of the chroma information is taken from the top and bottom row of pixels.

- In a 4:2:0 file the same occurs, except no (0) new chroma information is taken from the bottom row at all; the bottom row simply shares the chroma values sampled for the row above.
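The three cases above can be counted out in a few lines. This is a rough sketch that assumes the 4-wide by 2-high block described earlier and two chroma channels per sampled position; `samples_per_block` is a made-up helper name, not something from any camera or library.

```python
def samples_per_block(scheme: str) -> dict[str, int]:
    """Count the samples stored for one 4x2 pixel block under a J:a:b scheme."""
    j, a, b = (int(n) for n in scheme.split(":"))
    luma = j * 2               # every pixel in both rows keeps its luma sample
    chroma = (a + b) * 2       # chroma positions in top + bottom row, two channels each
    return {"luma": luma, "chroma": chroma, "total": luma + chroma}


full = samples_per_block("4:4:4")["total"]
for scheme in ("4:4:4", "4:2:2", "4:2:0"):
    counts = samples_per_block(scheme)
    print(f"{scheme}: {counts['luma']} luma + {counts['chroma']} chroma "
          f"= {counts['total']} samples ({counts['total'] / full:.0%} of 4:4:4)")

# 4:4:4: 8 luma + 16 chroma = 24 samples (100% of 4:4:4)
# 4:2:2: 8 luma + 8 chroma = 16 samples (67% of 4:4:4)
# 4:2:0: 8 luma + 4 chroma = 12 samples (50% of 4:4:4)
```

Note that the luma count never changes; all of the saving comes from the chroma samples.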

Like I said: Boring.

Here's a nice picture to liven things up:

By losing half or more of this colour data, the processor is able to create much smaller files without a perceptible dip in IQ. In fact, to the human eye the difference in appearance between a 4:4:4 and a 4:2:0 image is very hard to notice.

Why bother with the larger files if no one can tell the difference, I hear you scream? Once again it comes back to post-production.

4:2:0 files don't really stand up to heavy colour grading like the more robust and popular 4:2:2. 4:4:4 files, while large and unwieldy, are popular for colour-intensive processes such as chromakey filming (i.e. greenscreen) as they allow for better colour separation.

Now that we understand the way resolution and colour affect the file size, and therefore the load on the processor, we can see how this "pay-off" between IQ and frame rates works. If we're asking the camera to produce twice, or four, or even twelve times as many images per second then we have to accept that it will need to make sacrifices somewhere, i.e. in resolution and colour.
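To get a feel for the load involved, here is a back-of-envelope sketch of how much raw sample data each second of footage represents before the codec gets to work. It assumes DCI pixel dimensions of 4096 x 2160 for 4K and 2048 x 1080 for 2K, and it ignores codec compression entirely, so real recorded bitrates will be far lower. The function name `uncompressed_bitrate` is illustrative only.

```python
def uncompressed_bitrate(width: int, height: int, fps: float,
                         bit_depth: int, scheme: str) -> float:
    """Raw sample data per second, in bits, before any codec compression."""
    j, a, b = (int(n) for n in scheme.split(":"))
    # Average samples stored per pixel: 1 luma plus the chroma share of a 4x2 block.
    samples_per_pixel = (2 * j + 2 * (a + b)) / (2 * j)
    return width * height * fps * bit_depth * samples_per_pixel


configs = [
    ("4K DCI 25p 10-bit 4:2:2", 4096, 2160, 25, 10, "4:2:2"),
    ("4K DCI 50p 10-bit 4:2:2", 4096, 2160, 50, 10, "4:2:2"),
    ("2K DCI 25p 12-bit 4:4:4", 2048, 1080, 25, 12, "4:4:4"),
]
for label, w, h, fps, bits, scheme in configs:
    gbits = uncompressed_bitrate(w, h, fps, bits, scheme) / 1e9
    print(f"{label}: {gbits:.2f} Gbit/s")

# 4K DCI 25p 10-bit 4:2:2: 4.42 Gbit/s
# 4K DCI 50p 10-bit 4:2:2: 8.85 Gbit/s
# 2K DCI 25p 12-bit 4:4:4: 1.99 Gbit/s
```

Doubling the frame rate doubles the data, while dropping from 4K to 2K cuts the pixel count to a quarter, which leaves room for deeper colour at the same processing budget.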

Image by Jordan Allison

For example, the old, reliable Canon C300 Mark II, renowned "workhorse" pro video camera that it was, could cheerfully offer you a 4K DCI file in 10-bit 4:2:2 at the standard 25p, but if you asked it to give you 50p then you'd be dropping down to a 2K resolution to achieve this. However, on the same camera at 25p you could select 2K or FHD resolution and be rewarded with lovely 12-bit 4:4:4 colour. If you wanted to crank it all the way up to 100p then 1080p 10-bit would have to suffice, plus the processor would only use the centre 50 per cent of the sensor to pull this off, so you'd be looking at a 2x crop on your image.

And such is the eternal trade-off between frame rates, resolution and colour with which all videographers must contend.

All these terms are important to understand as these are the terms you'll most commonly hear when we talk about the capabilities of video cameras and what you can deliver to clients.

The most common things you'll hear pro video shooters ask about when discussing the specs of a new camera are these very things. When they ask me "What can it do at 4K?" they want to know the top frame rate, the sub-sampling and the bit depth, so I may answer "25 or 50p 4:2:2 10-bit". "What about slow mo?" they could ask, and "100p at 1080 10-bit but no crop" could be the reply.

The other things they'll ask about are what Codecs the camera uses, whether it shoots in Log and, if so, which kind. And that's exactly what we'll be covering in the next instalment.

See you then.

Part Three: Compression | Part Four: Color Space, Log and LUTs

Key points

- To achieve sustained shots at many frames per second (higher than stills cameras) some compression must occur.

- The main way files are compressed is by discarding colour information.

- Your bit depth determines how much colour information will be gathered from your sensor.

- Ten-bit is generally accepted as the standard for a professional video file.

- You may decide to shoot higher or lower depending on how much work needs to be done in the eventual colour grade.

- Sub-sampling processes determine which colour information will be retained.

- A 4:4:4 file has no sub-sampling. A 4:2:0 file is the most heavily sub-sampled of the three common options.

- Image quality will look very similar across all compression/sub-sampling options.

- Choosing a less compressed file type will give you more options in colour grading.

- If you want to shoot slow motion or a higher frame rate, you'll often need to compromise on colour and resolution.